My head was full of Image Processing and Vision on the last day of Semester 3, when we covered the whole syllabus from 8.30 AM until the evening. At the end we had to present a small application that we had implemented on one of the following topics.

1. Vehicle Number Plate Detection and Recognition

2. Eye Detection and Sleepiness detection

3. Steganography

4. Panoramic scene creation

5. Human face matching using SIFT/SURF features.

6. Palm based mobile authentication using Scale Invariant Features (e.g. SIFT, SURF)

7. Suit recognition of a card pack.

8. Video compression using similarity of frames

9. Eye based mobile interface

10. Expression detection (happy, sad, angry).

11. Line detection of an image

12. Any other image based application (need to get approval).

So this post is about Line Detection of an Image, which is the easiest of them all. :) I implemented it using the OpenCV library for Java in the Eclipse environment. In addition to the jar file, the library comes with a DLL file, so you have to follow its tutorial to find out how to set up your Eclipse environment. Even though it works perfectly in Eclipse after configuration, I still haven't been able to find a way to export the application as a runnable jar because of this DLL issue. (Please direct me if you find a proper way :) )
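One approach that is commonly used for this kind of DLL problem (I have not verified it with this application, so treat it as a sketch) is to pack the DLL inside the jar as a resource, copy it to a temporary file at startup and load it from there with `System.load`. The class name `NativeLoader` and the resource path in the usage note are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

final class NativeLoader {
    /** Copies a stream (for example a DLL bundled in the jar) to a temp file and returns its path. */
    static Path extractToTemp(InputStream in, String suffix) throws IOException {
        Path tmp = Files.createTempFile("native-", suffix);
        tmp.toFile().deleteOnExit();
        Files.copy(in, tmp, StandardCopyOption.REPLACE_EXISTING);
        return tmp;
    }

    /** Loads a native library that is stored as a classpath resource (the resource name is up to you). */
    static void loadFromClasspath(String resource) throws IOException {
        try (InputStream in = NativeLoader.class.getResourceAsStream(resource)) {
            if (in == null) {
                throw new IOException("Resource not found: " + resource);
            }
            int dot = resource.lastIndexOf('.');
            String suffix = dot >= 0 ? resource.substring(dot) : ".tmp";
            // System.load needs an absolute path to the extracted file.
            System.load(extractToTemp(in, suffix).toAbsolutePath().toString());
        }
    }

    public static void main(String[] args) throws IOException {
        // Demonstrates only the extraction step, using dummy bytes instead of a real DLL.
        Path p = extractToTemp(new ByteArrayInputStream(new byte[] {1, 2, 3}), ".bin");
        System.out.println("Extracted " + Files.size(p) + " bytes to " + p);
    }
}
```

In the real application you would call something like `NativeLoader.loadFromClasspath("/native/opencv_java.dll")` before touching any OpenCV class. That path is only a placeholder, since the actual DLL name depends on your OpenCV version.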

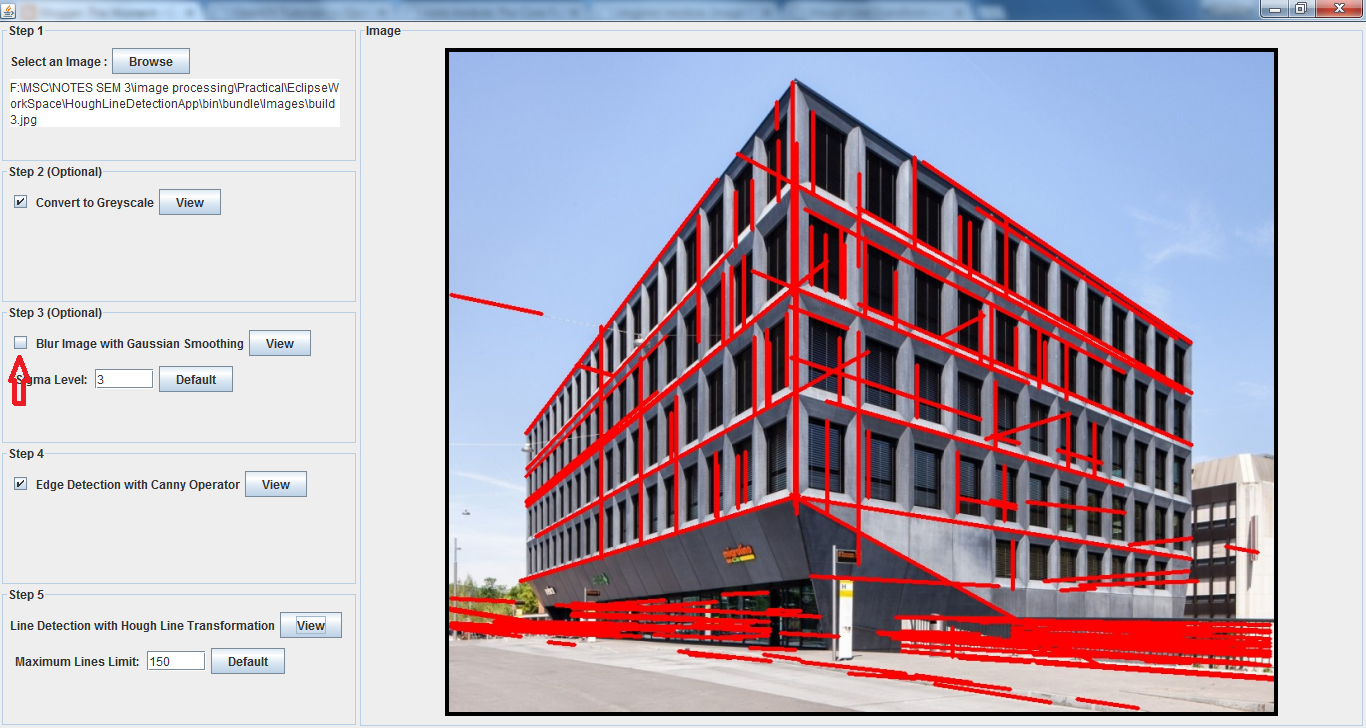

Most of the important facts about the technique used for the line detection are explained in the slides. So here I will just give you a few screenshots of the application.

Load an image to the application

Images can be in *.jpg, *.jpeg or *.png format. OpenCV handles these images very easily. I didn't have time to look into how to deal with other image formats like *.tiff and *.gif. Hopefully there is a way to handle them too with a little more coding.

All of the techniques used below are covered in more detail in the OpenCV tutorial for image processing. Have a look there.
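For the other formats, one possible route (an assumption, not something the application does) is to decode the file with the JDK's own `javax.imageio.ImageIO` and hand the pixels over to OpenCV afterwards. The sketch below only covers the first half: it asks ImageIO whether it has a reader for a given file extension. The class and method names are made up for illustration.

```java
import java.util.Locale;
import javax.imageio.ImageIO;

final class FormatCheck {
    /** Returns the lower-cased extension of a file name, or "" if it has none. */
    static String extensionOf(String fileName) {
        int dot = fileName.lastIndexOf('.');
        return dot < 0 ? "" : fileName.substring(dot + 1).toLowerCase(Locale.ROOT);
    }

    /** True if the JDK's ImageIO reports a reader for this file's extension. */
    static boolean jdkCanRead(String fileName) {
        String ext = extensionOf(fileName);
        if (ext.isEmpty()) {
            return false;
        }
        for (String suffix : ImageIO.getReaderFileSuffixes()) {
            if (suffix.equalsIgnoreCase(ext)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // The answer for *.tiff depends on the JDK version you run this on.
        for (String name : new String[] {"road.jpg", "road.png", "road.gif", "road.tiff"}) {
            System.out.println(name + " -> " + jdkCanRead(name));
        }
    }
}
```

If the check passes, `ImageIO.read` gives you a `BufferedImage`. Converting that into an OpenCV matrix is a separate step that is not shown here.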

Line Detection

The lines drawn in red on the image are the lines that have been detected. I limited the number of lines to a fixed maximum, because if you do not prepare the image properly before feeding it to the Hough line transform (for example, by not blurring it), it will detect unnecessary lines too. So it is better to have a limit. See the screenshot below, which was captured without the blurring step, and notice how many unnecessary lines have been detected.
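To make the idea of "Hough transform plus a limit" concrete, here is a minimal plain-Java sketch. It is not the OpenCV call the application uses. It assumes the edge image is given as a `boolean[][]` (true = edge pixel), lets every edge pixel vote for all (rho, theta) lines passing through it, and then keeps at most `maxLines` of the strongest peaks. All names and the size of the suppression window are illustrative choices.

```java
import java.util.Arrays;

final class HoughSketch {
    /**
     * Returns up to maxLines lines as {rho, thetaDegrees, votes}, strongest first.
     * Only accumulator cells with at least `threshold` votes are considered.
     */
    static int[][] detectLines(boolean[][] edges, int threshold, int maxLines) {
        int h = edges.length, w = edges[0].length;
        int maxRho = (int) Math.ceil(Math.hypot(w, h));
        int[][] acc = new int[2 * maxRho + 1][180]; // rows: rho shifted by maxRho, cols: theta in degrees

        // Voting: each edge pixel votes once per theta for the line x*cos(theta) + y*sin(theta) = rho.
        for (int y = 0; y < h; y++) {
            for (int x = 0; x < w; x++) {
                if (!edges[y][x]) continue;
                for (int t = 0; t < 180; t++) {
                    double theta = Math.toRadians(t);
                    int rho = (int) Math.round(x * Math.cos(theta) + y * Math.sin(theta));
                    acc[rho + maxRho][t]++;
                }
            }
        }

        // Peak picking: take the best cell, clear a small window around it, repeat up to the limit.
        int[][] lines = new int[maxLines][];
        int count = 0;
        while (count < maxLines) {
            int bestR = -1, bestT = -1, best = threshold - 1;
            for (int r = 0; r < acc.length; r++) {
                for (int t = 0; t < 180; t++) {
                    if (acc[r][t] > best) { best = acc[r][t]; bestR = r; bestT = t; }
                }
            }
            if (bestR < 0) break; // nothing left above the threshold
            lines[count++] = new int[] {bestR - maxRho, bestT, best};
            for (int dr = -2; dr <= 2; dr++) {
                for (int dt = -2; dt <= 2; dt++) {
                    int r = bestR + dr, t = bestT + dt; // theta wrap-around is ignored in this sketch
                    if (r >= 0 && r < acc.length && t >= 0 && t < 180) acc[r][t] = 0;
                }
            }
        }
        return Arrays.copyOf(lines, count);
    }

    public static void main(String[] args) {
        boolean[][] edges = new boolean[20][100];
        Arrays.fill(edges[5], true); // one horizontal line at y = 5
        for (int[] line : detectLines(edges, 50, 3)) {
            System.out.println("rho=" + line[0] + " theta=" + line[1] + " votes=" + line[2]);
        }
    }
}
```

Raising `threshold` or lowering `maxLines` are the two knobs for cutting down unwanted lines. A noisy edge image produces many weak peaks, which is why the limit helps.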

Edge Detection

Not every image can be fed into the Hough transform. You have to feed it an edge-detected image, where the white lines represent the edges and the black areas are the non-edges. What happens after that is described in the slides.
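As a rough illustration of what "edge detected image" means, the plain-Java sketch below marks a pixel as an edge when its Sobel gradient magnitude is above a threshold. This is a deliberately simplified stand-in and not the edge detector used in the application or in the OpenCV tutorial. The input is assumed to be a grayscale image stored as `int[][]` with values 0 to 255, and border pixels are simply left as non-edges.

```java
final class EdgeSketch {
    /** Returns true (white) where the Sobel gradient magnitude exceeds the threshold. */
    static boolean[][] sobelEdges(int[][] gray, int threshold) {
        int h = gray.length, w = gray[0].length;
        boolean[][] edges = new boolean[h][w];
        for (int y = 1; y < h - 1; y++) {
            for (int x = 1; x < w - 1; x++) {
                // Horizontal change: right column minus left column.
                int gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1])
                       - (gray[y - 1][x - 1] + 2 * gray[y][x - 1] + gray[y + 1][x - 1]);
                // Vertical change: bottom row minus top row.
                int gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1])
                       - (gray[y - 1][x - 1] + 2 * gray[y - 1][x] + gray[y - 1][x + 1]);
                edges[y][x] = Math.hypot(gx, gy) > threshold;
            }
        }
        return edges;
    }

    public static void main(String[] args) {
        // Left half black, right half white: the edge runs down the middle.
        int[][] gray = new int[6][6];
        for (int[] row : gray) {
            for (int x = 3; x < 6; x++) row[x] = 255;
        }
        for (boolean[] row : sobelEdges(gray, 100)) {
            StringBuilder sb = new StringBuilder();
            for (boolean e : row) sb.append(e ? '#' : '.');
            System.out.println(sb);
        }
    }
}
```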

Blurring the Image

Edge detection plays an important role here. If the edge detection is poor, the line detection will go totally wrong. So the first thing you have to do is improve the image that is fed into the edge detection. One thing you can do is reduce the effect of noisy pixels, which can be done by blurring the image.

To blur the image you have to find a good sigma value, that is, how much blurring gives a good result in edge detection. Well.... too much blurring gives the following result.

So don't use too much blurring. You have also seen what happens if you don't blur at all. So the best thing to do is to blur, but only slightly. In OpenCV you do not necessarily need to specify the kernel size (3 x 3, 5 x 5, ...). It is selected automatically according to your sigma value.
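The link between sigma and kernel size can be sketched in plain Java as follows. The size rule used here (roughly three sigmas on each side, forced to an odd number) is modelled on what I understand OpenCV to do for 8-bit images, so treat it as an assumption and not as the library's exact behaviour. The sketch builds a normalized 1D Gaussian kernel and blurs a single row of pixel values with it.

```java
final class GaussianSketch {
    /** Picks an odd kernel size from sigma (assumed rule: about 3 sigmas on each side). */
    static int kernelSizeFor(double sigma) {
        return (int) Math.round(sigma * 6 + 1) | 1;
    }

    /** Builds a 1D Gaussian kernel whose weights sum to 1. */
    static double[] kernel1D(double sigma) {
        int n = kernelSizeFor(sigma);
        int half = n / 2;
        double[] k = new double[n];
        double sum = 0;
        for (int i = 0; i < n; i++) {
            double d = i - half;
            k[i] = Math.exp(-(d * d) / (2 * sigma * sigma));
            sum += k[i];
        }
        for (int i = 0; i < n; i++) k[i] /= sum;
        return k;
    }

    /** Blurs one row of pixel values, repeating the border pixels at both ends. */
    static double[] blurRow(double[] row, double sigma) {
        double[] k = kernel1D(sigma);
        int half = k.length / 2;
        double[] out = new double[row.length];
        for (int i = 0; i < row.length; i++) {
            double sum = 0;
            for (int j = -half; j <= half; j++) {
                int idx = Math.min(row.length - 1, Math.max(0, i + j));
                sum += row[idx] * k[j + half];
            }
            out[i] = sum;
        }
        return out;
    }

    public static void main(String[] args) {
        for (double sigma : new double[] {0.5, 1.0, 2.0}) {
            System.out.println("sigma=" + sigma + " -> kernel size " + kernelSizeFor(sigma));
        }
    }
}
```

A larger sigma gives a wider kernel, which spreads each pixel over more neighbours. That is why too much blurring washes out the very edges you want to detect.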

Grayscale

Judging by the results I got, this option is not really necessary. But sometimes you may find it valuable.
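For reference, a grayscale conversion boils down to a weighted sum of the red, green and blue channels. The sketch below uses the common 0.299 / 0.587 / 0.114 luminance weights on a packed `0xRRGGBB` pixel. The pixel layout and the names are assumptions for illustration and not taken from the application.

```java
final class GraySketch {
    /** Converts a packed 0xRRGGBB pixel to a gray level between 0 and 255. */
    static int toGray(int rgb) {
        int r = (rgb >> 16) & 0xFF;
        int g = (rgb >> 8) & 0xFF;
        int b = rgb & 0xFF;
        return (int) Math.round(0.299 * r + 0.587 * g + 0.114 * b);
    }

    public static void main(String[] args) {
        int[] pixels = {0xFF0000, 0x00FF00, 0x0000FF, 0xFFFFFF};
        for (int p : pixels) {
            System.out.println(String.format("%06X", p) + " -> " + toGray(p));
        }
    }
}
```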

Finally, let's try another image of a road.

